The evolution of programming languages from the electromechanical 0GL to the advanced 5GL has fundamentally altered human-computer interaction. High-level languages and Low-Code/No-Code platforms have democratized programming, and the recent integration of AI tools now challenges traditional programming roles. The confluence of AI with coding practices may be more than a further incremental change: it could mark the start of a new paradigm in software development, a symbiosis of human creativity and computational efficiency.

The human/computer interaction

How humans program computers has only changed a handful of times in the last 130 years. The first tabulating machine was electromechanical. It was introduced by Herman Hollerith in 1890, when it was used for that year's US census, and in fact these business machines put the BM in IBM. They could do limited digital processing on data provided to them via punched cards. An operator would program them with jumper wires and plugs on a pin board, telling the electricity where to flow and thereby which calculations to carry out. Let’s call this programming approach the Zeroth Generation Language, or 0GL.

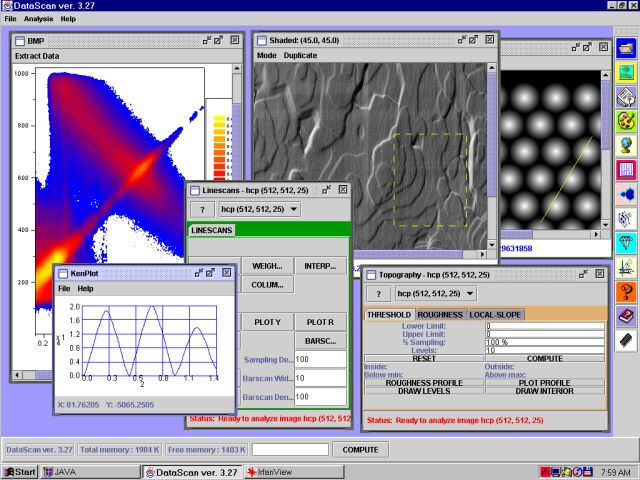

The first large computers that followed borrowed Joseph Jacquard’s loom approach from 1803, using a defined instruction set encoded by ones and zeros; these were the First Generation Languages (1GL). Often they were implemented by giant rolls of black tape with holes, a technology dating back to Basile Bouchon in 1725. The computing power was limited, but the only limit to the size of your application was how much tape your rolls could hold.

The Second Generation (2GL) assembly languages increased human usability by replacing 0s and 1s with symbolic names. But this was in fact only a small paradigm change, because these languages were just as tied to their hardware as were the wires in the tabulating machines 50 years before them.
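To make the idea of symbolic names concrete, here is a minimal sketch of a toy assembler in Python. The 8-bit instruction set, the mnemonics and the `assemble` function are all invented for illustration and do not correspond to any real machine. The point is that each symbolic line maps one-to-one onto a machine word, so the program stays bound to this particular (hypothetical) hardware.

```python
# Hypothetical 8-bit instruction set, invented for illustration only:
# the top 4 bits hold the opcode, the low 4 bits hold the operand.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(source):
    """Translate symbolic lines such as 'ADD 3' into 8-bit machine words."""
    words = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        mnemonic = parts[0]
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 15:
            raise ValueError(f"operand out of range: {operand}")
        # One symbolic instruction becomes exactly one machine word.
        words.append(format((OPCODES[mnemonic] << 4) | operand, "08b"))
    return words


PROGRAM = """
LOAD 2
ADD 3
STORE 4
HALT
"""

if __name__ == "__main__":
    for word in assemble(PROGRAM):
        print(word)
```

Changing the hardware would mean changing the opcode table and rewriting every program written against it, which is why the step from 1GL to 2GL improved readability more than portability.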

The next great jump, to the Third Generation Languages (3GL), came with FORTRAN (in 1957) and COBOL (in 1959). These languages were more human-readable than assembly, but that was not the key point. The key point was abstraction from the hardware, achieved via machine-specific compilers, so that one FORTRAN or COBOL application would, in principle, give the same answers on any machine on which it was run.

The transition to Fourth Generation Languages (4GL) was all about a leap in usability. Developed at IBM in the early 1970s, SQL is the most notable example, with its English-like, declarative syntax: you tell it what you want, and it figures out how to get it. Despite its age, it has never been displaced and remains the gold standard for interacting with relational databases today.
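The difference between saying how and saying what can be sketched in a few lines of Python, using the standard-library `sqlite3` module. The sample data and both function names are hypothetical, chosen only for this illustration. The first function spells out the steps by hand; the second hands a single SQL statement to the database engine and lets it decide how to produce the answer.

```python
import sqlite3

# Hypothetical sample data, invented purely for illustration.
ORDERS = [
    ("alice", 120.0),
    ("bob", 35.5),
    ("alice", 80.0),
    ("carol", 210.0),
    ("bob", 14.5),
]


def totals_imperative(orders):
    """Imperative style: spell out *how* to compute the totals, step by step."""
    totals = {}
    for customer, amount in orders:
        totals[customer] = totals.get(customer, 0.0) + amount
    # Keep customers who spent more than 100, sorted by name.
    return sorted((c, t) for c, t in totals.items() if t > 100)


def totals_declarative(orders):
    """Declarative style: state *what* is wanted and let the engine plan it."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
        rows = conn.execute(
            "SELECT customer, SUM(amount) AS total "
            "FROM orders GROUP BY customer "
            "HAVING SUM(amount) > 100 ORDER BY customer"
        ).fetchall()
    finally:
        conn.close()
    return rows


if __name__ == "__main__":
    print(totals_imperative(ORDERS))
    print(totals_declarative(ORDERS))
```

Both functions return the same rows. The SQL version never mentions loops, dictionaries or sort algorithms, and those choices are left to the database.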

Some computer scientists argue that the newest Low-Code/No-Code programming environments, such as Microsoft Power Apps, are the latest addition to the 4GL cadre, since they similarly require little knowledge of traditional programming structures. This paradigm is exploding in popularity and transforming the enterprise IT landscape: business users (not IT professionals) create ephemeral applications to solve specific and often short-term business problems. But how ironic that, with their GUIs and controls and connectors, they are the modern digital equivalents of the 0GL tabulating machine pin boards from 130 years ago!

Some people have argued there are now Fifth Generation Languages (5GL), used for artificial intelligence and machine learning, where the focus is on the results expected, not on how to achieve them.

From coding by hand to AI collaboration

The evolution of 0GL to 5GL is all about the leaps in how humans interact with machines. Not surprisingly, then, the advent of ChatGPT (and its cousins like Bard and GitHub Copilot) has brought about a new paradigm in how we develop applications. As the new generation of college computer science students well knows, you don’t have to write your own Java/PHP/Python… code anymore; instead, you can ask ChatGPT to write it for you. Or, for example, you can feed ChatGPT buggy or poor-quality code and ask it to remedy the situation, or to create the tests and documentation. To be sure, there are limits, and a good human understanding of the language is essential to avoid errors and ensure you get the results you want. But the technology is advancing rapidly, its limitations are receding, and the amount of correction the user has to supply keeps shrinking.

If we project this situation forward, even just a bit, its absurdity becomes self-evident: humans asking AIs to create human-readable code for humans who no longer need to read the code! This paradox underscores a new era in which the traditional roles of human programmers are not just assisted but fundamentally altered by artificial intelligence; it marks a significant evolution in software development.

With artificial intelligence now a key player in code creation, we need to examine its repercussions on this craft. The present state may be the start of a larger change, in which artificial intelligence becomes a collaborative partner in code creation and the line between developer and programming tool grows increasingly indistinct: in other words, a symbiosis of human creativity and computational power.