I’ve been lucky enough to build many big things in my life: huge experiments that took years to complete, an organic crystal growth laboratory, and large organizations that transformed companies. And I’ve learned this much: if what you’re building is big enough, then there are some key best practices to keep in mind.

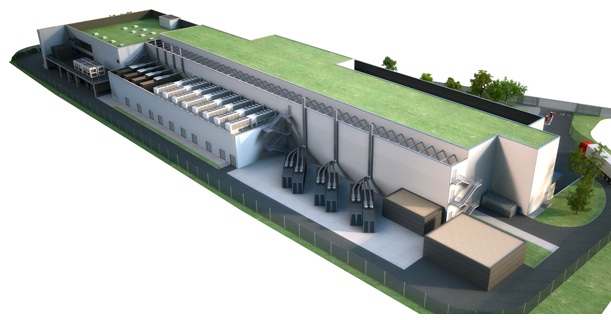

Recently, I had a once-in-a-lifetime chance to be involved with the creation of a “Private Cloud” for a large multinational corporation, housed in two ultra-modern, Tier III/IV co-located data centers, and including state-of-the-art technology and products. Naturally (as with most business topics) many details are confidential and I cannot share them.

However, some “best practices for building big things” are quite generic to any large project.

Best Practice 1 of 4: “We are all too dumb to understand the end-state”

My Ph.D. thesis advisor taught me: if what you are building is big enough and complex enough, then before it is built, we are all too dumb to understand the fine details of running and optimizing it. Translation: before you have built something big, be careful about decisions with long-term impacts, such as staffing and processes. It is best to keep things flexible and adaptable, and to learn as you go. I was given plenty of advice about the various types of system administrators we should hire, or the business processes to implement for documenting the services, and I think I frustrated many people by not making a few of those decisions early. But in retrospect, I am glad I never committed to such “guesses with long-term consequences.” Reality turned out a bit different from what we expected, and it was only after we gained some initial experience that we clearly understood the best direction to take.

Best Practice 2 of 4: “Numbered releases”

You may get a surprising amount of negative stakeholder feedback along the lines of “When will it be completed?” However, a big data center may never be completed! You’ll be designing and adding new services the business needs and retiring old ones it no longer needs. And if you were smart, you may have deliberately underinvested in procuring the hardware capacity you’ll ultimately need, so that you add what you need no earlier than you need it. That business decision alone, while wise, can cause no end of frustration to some stakeholders! Our key stakeholders were getting frustrated: from their perspective, it looked like a project that might never end.

My good friend and project manager Urs Schwarz proposed a simple solution: we communicated the data center construction plan as a series of numbered releases, each scheduled for a specific release date: 0.9, 1.0 (operational readiness for internal users), 1.1, 1.2 (operational readiness for end-users), 1.3 (service XYZ available), etc. This gave far better insight into the building phase, and was far easier to communicate, than any other approach we tried.

Best Practice 3 of 4: “Stabilization phase”

Things take time to break in. Whether it is a huge data center or even a new automobile, don’t forget you’ll need to plan a “stabilization phase” whose length is proportional to the build time and complexity. The challenge here is not knowing that this phase is needed; this is elementary knowledge that any engineer (be it civil, mechanical, electrical, or IT) learns in school. The challenge is communicating that fact to key stakeholders who don’t understand it. Unfortunately, I never learned any best practices for doing this.

Best Practice 4 of 4: “Failure is good!”

During the stabilization phase, my wish was simple, though this, too, confused stakeholders: I wanted any system that could fail to fail. And when systems did fail, I was happy. I was only unhappy when too few systems were failing compared to my instincts about what should go wrong.

It’s a different story for production systems like airplanes or medical devices, but for one-off endeavors where you are also trying not to spend more money than you must, what I’ve learned is this: you can invest huge amounts in business continuity and so-called safety factors, but all of it may remain an (expensive) theoretical exercise until a system actually fails. It is only by seeing real systems fail that you learn important things about how to prevent those failures from happening again. So even today, where systems have not yet failed, I have no idea about their mean time between failures (MTBF). But where systems have failed, I feel much more confident that we’ve built in the protections needed to give us the availability we want.
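
To make the MTBF point concrete, here is a minimal sketch using the textbook definitions: MTBF as observed operating time divided by the number of observed failures, and steady-state availability as MTBF / (MTBF + MTTR), where MTTR is the mean time to repair. The function names and all numbers are hypothetical illustrations, not data from the project described here.

```python
from typing import Optional


def estimate_mtbf(operating_hours: float, failures: int) -> Optional[float]:
    """Point estimate of MTBF: operating time divided by observed failures.

    Returns None when nothing has failed yet, because zero observed
    failures gives no basis for an estimate.
    """
    if failures == 0:
        return None
    return operating_hours / failures


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical stabilization-phase observations (illustrative numbers only).
storage_mtbf = estimate_mtbf(operating_hours=4000, failures=2)
network_mtbf = estimate_mtbf(operating_hours=4000, failures=0)

print(storage_mtbf)   # 2000.0
print(network_mtbf)   # None: no failures yet, so no MTBF estimate

# Assumes a hypothetical 4-hour mean time to repair.
print(round(availability(storage_mtbf, mttr_hours=4), 4))  # 0.998
```

The system that has never failed is the one that returns `None`: the arithmetic simply has nothing to work with until a real failure is observed.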